How to Build a Custom AI Model: A Step-by-Step Guide

By Suffescom Solutions

April 23, 2025

Artificial intelligence (AI) has evolved from a concept in science fiction into a pragmatic tool used across various industries. Fueled by progress in machine learning algorithms, big data analytics, cloud computing, and increasingly affordable hardware, AI is shaping a remarkable landscape that changes our interactions with software and devices. Nevertheless, learning how to create AI can appear daunting.

An AI model is a powerful tool for streamlining complex tasks and augmenting human capabilities, opening up new avenues for efficiency and precision. Its applications span various industries, from financial forecasting to medical diagnostics, showcasing the boundless potential of AI models. This guide shows how to build AI models that drive business innovation.

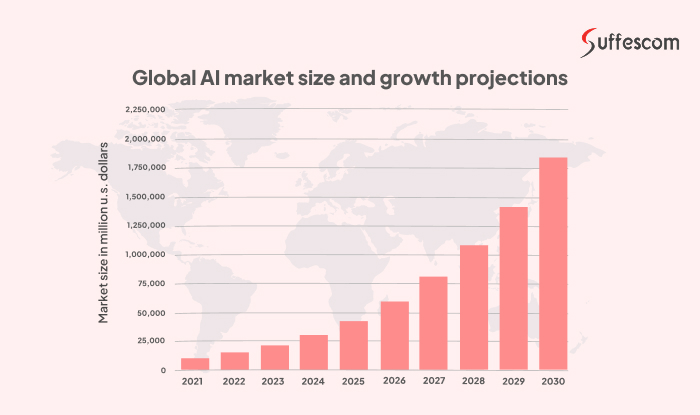

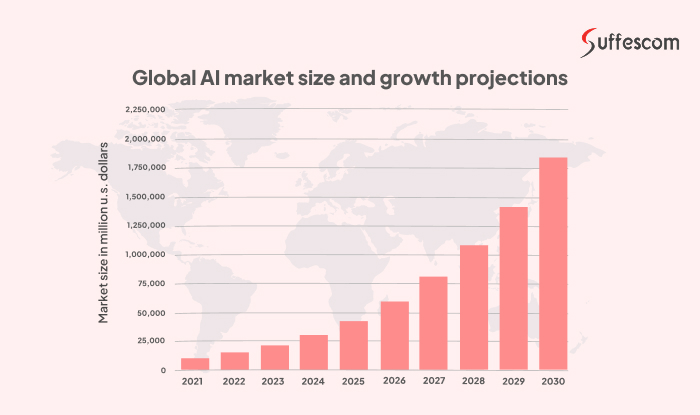

- Market size, 2025 to 2030: The AI market is projected to grow at a compound annual growth rate (CAGR) of 35.9% from 2025 to 2030 (source: Grand View Research).

- Software segment: The AI software market alone is anticipated to reach USD 391.43 billion by 2030, growing at a CAGR of 30% from 2024 (source: ABI Research).

What is an AI Model?

Artificial intelligence (AI) helps businesses enhance productivity through informed decision-making. An AI model is a trained computer program that can execute specific tasks and make decisions on its own, rather than merely mimicking human intelligence. Data analysts, data scientists, and developers collaborate to build such models, for example to forecast industry results. They train the system on high-quality historical data so that it can identify patterns, trends, and relationships across diverse scenarios and make precise, relevant decisions.

For instance, banks can develop AI models to forecast fraud by analyzing consumer behavior data and identifying specific actions that are likely to lead to fraudulent transactions. These models could subsequently anticipate the likelihood of fraud reoccurring when similar conditions arise.

Key Components Needed to Build a Custom AI Model

Creating a custom AI model requires a structured approach that combines technical resources, data, and expertise. Partnering with an experienced AI development company can streamline this process by offering the specialized knowledge and tools needed to develop AI models successfully. The key components are outlined below.

1. Clear Objective and Problem Definition

Every AI model starts with a well-defined goal. Whether it's predicting customer behavior, automating tasks, or analyzing images, the problem must be specific. For example, instead of aiming for "improving sales," focus on "predicting which customers are likely to churn within the next 30 days." A clear objective guides the choice of data, algorithms, and evaluation metrics.

2. Quality Data

Quality data is crucial for AI models to learn effectively. It must be relevant, clean, sufficient, and diverse to capture real-world patterns and avoid bias. For example, facial recognition models need thousands of labeled images from varied demographics, sourced from public, proprietary, or synthetic data.

3. Computational Resources

AI models, especially those needing deep learning, require high computing power. CPUs handle general tasks, while GPUs and TPUs accelerate complex computations in neural networks. Cloud platforms, such as Amazon Web Services (AWS), Google Cloud, and Microsoft Azure, provide scalable resources. Small projects can be run on laptops with good graphics processing units (GPUs), but larger models require cloud clusters or specialized hardware.

4. Algorithms and Frameworks

The algorithm determines how an AI model learns from data: supervised for labeled data, unsupervised for pattern discovery, and reinforcement for decision-making. Frameworks like TensorFlow, PyTorch, Scikit-learn, and Hugging Face simplify implementation. You can use open-source tools like TensorFlow to reduce costs.

5. Skilled Team

AI model customization relies on collaboration among specialists to ensure both technical accuracy and practical relevance. Data scientists design and train models, data engineers handle data pipelines, domain experts provide industry context, and software engineers integrate models into applications.

6. Training and Validation Process

Training an AI model involves feeding it data and adjusting its parameters to reduce errors. The data is split into training, validation, and test sets: the training set is used to fit the model, the validation set to tune it, and the test set to evaluate it. Techniques like cross-validation and regularization help prevent overfitting, so the model performs well on new data.
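The split and cross-validation steps can be sketched in a few lines of plain Python. This is a minimal illustration, not a recommended implementation: the function names, the 70/15/15 proportions, and the fixed seed are assumptions chosen for the example, and libraries such as Scikit-learn provide equivalent utilities.

```python
import random

def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle rows and split them into train, validation, and test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

def k_fold_indices(n_rows, k=5):
    """Yield (train_idx, val_idx) index lists for k-fold cross-validation."""
    indices = list(range(n_rows))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_rows // k + (1 if i < n_rows % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size

rows = list(range(100))  # stand-in for 100 data records
train, val, test = train_val_test_split(rows)
folds = list(k_fold_indices(len(train), k=5))
```

The test set is set aside first and never used for tuning. Cross-validation then rotates the validation fold within the training portion only.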

7. Evaluation Metrics

Metrics evaluate model performance and should match the task. Accuracy suits classification with reasonably balanced classes, precision and recall are more informative for imbalanced data, and mean squared error is well-suited for regression. For example, in medical diagnosis, high recall is crucial so that as few actual cases as possible are missed.
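To make the difference between these metrics concrete, here is a small sketch that computes them by hand. The labels and predictions are made-up illustrative data, and the helper names are assumptions for this example.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, and recall for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# Imbalanced toy data: only 2 of 10 cases are positive, and one is missed.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)

# Toy regression targets and predictions.
mse = mean_squared_error([3.0, 5.0], [2.0, 7.0])
```

On this toy data the accuracy is 0.9 even though the model misses half of the positive cases (recall 0.5), which is why accuracy alone can mislead on imbalanced problems.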

8. Deployment and Monitoring

After training, the model is deployed into real-world systems like apps or web services. This involves integration via APIs, ensuring scalability for real-time use, and ongoing monitoring to update the model as data changes, like a recommendation system adapting to user preferences.

9. Ethical Considerations

AI models can raise issues like bias and privacy concerns. To address this, ensure fairness by testing across demographics, maintain transparency by clearly explaining decisions, and adhere to data privacy laws, such as the General Data Protection Regulation (GDPR).

How the Five-Layer Model Optimizes Enterprise AI Systems

To build a cohesive AI system, enterprise AI architecture typically comprises multiple layers. The five-layer model is a popular strategy that divides the various parts of an AI system into distinct levels, each with its own function. The five-layer enterprise AI architecture paradigm is described in the following way:

Data Science Layer – Where Intelligence is Built

The Data Science Layer is where raw data is transformed into intelligence through analytics and model development. It involves feature engineering, model training, algorithm experimentation, and performance evaluation. Tools such as Jupyter, RStudio, TensorFlow, and Scikit-learn support this work, with data scientists ensuring that models align with business goals.

Technology Layer – Infrastructure for Scalability

The Technology Layer enables the deployment of custom AI models by providing essential hardware, software, and cloud infrastructure. It includes compute resources, model hosting platforms like AWS SageMaker and Azure ML, MLOps pipelines for CI/CD, and APIs for integration. This layer ensures the scalability and reliability of AI systems across the organization.

Business Logic Layer – Customization and Control

The Business Logic Layer ensures AI aligns with business rules and workflows. It defines how AI outputs are used, such as mapping predictions to actions, setting decision thresholds, and integrating with systems like CRM or ERP. It also manages user roles and governance, like how a fraud detection model might flag transactions for review or automatic blocking.
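As a rough sketch of mapping predictions to actions, the fraud example could be expressed as a small policy object. The threshold values, the `FraudPolicy` class, and the action names are hypothetical and would come from business rules in practice.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FraudPolicy:
    """Decision thresholds owned by the business, not by the model (hypothetical values)."""
    review_threshold: float = 0.50
    block_threshold: float = 0.90

def decide_action(fraud_score: float, policy: FraudPolicy = FraudPolicy()) -> str:
    """Map a model's fraud probability to a business action."""
    if not 0.0 <= fraud_score <= 1.0:
        raise ValueError("fraud_score must be a probability between 0 and 1")
    if fraud_score >= policy.block_threshold:
        return "block"    # block the transaction automatically
    if fraud_score >= policy.review_threshold:
        return "review"   # flag for manual review
    return "approve"

actions = [decide_action(s) for s in (0.10, 0.65, 0.97)]
```

Keeping thresholds in a separate policy object means the business can tighten or relax them without retraining the model.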

Interaction Layer – The Human-AI Interface

The topmost layer is the user interface, where people interact with the AI system through dashboards, chatbots, or apps. It focuses on intuitive UI/UX, data visualization tools like Power BI, NLP for conversational AI, and feedback loops. User adoption is critical—if insights aren't easy to understand or use, the model's value is lost.

Security & Governance Layer – Ensuring Trust & Compliance

The Security & Governance Layer ensures that enterprise AI systems are safe, ethical, and compliant by enforcing data privacy, model transparency, risk management, and adherence to regulations. It covers access control, encryption, bias monitoring, and ethical oversight, using tools such as Identity and Access Management (IAM) systems (Okta, Azure AD) and data governance platforms such as Collibra and Alation.

How to Choose the Right AI Model Type?

Choosing the right AI model type and training methodology depends on the problem you are solving, the data you have, and the outcome the business needs. The table below compares the most common options.

| AI Model Type | Description | Common Use Cases | Business Impact / Example |
| --- | --- | --- | --- |
| Supervised Learning | Learns from labeled datasets by minimizing error through a loss function. | Fraud detection, sentiment analysis, and customer segmentation | A retail company reduced customer churn by 15%, resulting in an annual savings of $2 million. |
| Unsupervised Learning | Finds hidden patterns in data without pre-labeled inputs. | Market basket analysis, customer profiling, anomaly detection | Enables deep insights into customer behavior and operations. |
| Semi-supervised Learning | Combines small amounts of labeled data with large volumes of unlabeled data. | Medical imaging, document classification | Cost-effective when labeling data is expensive or time-consuming. |
| Reinforcement Learning | Learns optimal behaviors through interaction with the environment using rewards and penalties. | Robotics, dynamic pricing, and AI game development | Enhances real-time decision-making and automation capabilities. |
| Self-supervised Learning | Generates its own labels from raw input data, commonly used in NLP and computer vision. | Document summarization, language translation, and visual recognition | Powers large language models, such as GPT and BERT, enabling advanced text understanding. |
| Generative Models | Creates new content by learning underlying patterns in the training data. | Content creation, marketing design, and product prototyping | Automates creative tasks, accelerating content generation and prototyping. |
| Transfer Learning | Fine-tunes existing models on specific tasks using limited data, saving time and resources. | Domain-specific AI solutions, small dataset training | Reduces development time and cost, especially in niche or data-scarce domains. |
| Federated Learning | Trains models across decentralized devices without centralizing data, ensuring user privacy. | Healthcare diagnostics, mobile device personalization | A healthcare provider utilised this model to enhance secure diagnostics and maintain compliance. |

Step-by-Step Approach to Custom AI Model Development

In the digital age, artificial intelligence (AI) has evolved into a strategic enabler for enterprises, reshaping how organizations interact with data, customers, and markets. However, building a custom AI model tailored to your business requirements is not a one-size-fits-all process. It involves a systematic, well-governed approach—from identifying the business problem to deploying a scalable solution that evolves with your needs. Here's a comprehensive breakdown of how businesses can successfully develop and implement AI models.

Step 1: Define a Clear Business Objective

Start with a specific, measurable goal that aligns with your business strategy. Identify the end-users, affected processes, and the expected value. Define KPIs such as accuracy, cost reduction, or process time saved. Clear objectives ensure every technical decision drives tangible business outcomes.

Step 2: Data Collection and Preparation

Gather data from relevant internal systems, APIs, sensors, or public sources. Ensure it's complete, accurate, and diverse enough to represent real-world scenarios. Clean and normalize the data, encode categorical features, and label them if needed. Maintain regulatory compliance (GDPR, HIPAA) throughout the process.
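The cleaning, normalization, and encoding steps might look like the following sketch. The field names (`monthly_spend`, `plan`) and the sample records are invented for illustration, and min-max scaling is just one of several normalization choices.

```python
def clean_rows(rows, required):
    """Drop records that are missing any required field."""
    return [r for r in rows if all(r.get(k) is not None for k in required)]

def min_max_normalize(values):
    """Rescale numeric values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column carries no information
    return [(v - lo) / (hi - lo) for v in values]

def one_hot_encode(values):
    """Turn a categorical column into 0/1 indicator columns."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]

# Hypothetical customer records; one has a missing value.
raw = [
    {"monthly_spend": 20.0, "plan": "basic"},
    {"monthly_spend": None, "plan": "pro"},
    {"monthly_spend": 60.0, "plan": "pro"},
    {"monthly_spend": 100.0, "plan": "basic"},
]

rows = clean_rows(raw, required=("monthly_spend", "plan"))
spend = min_max_normalize([r["monthly_spend"] for r in rows])
categories, plan_encoded = one_hot_encode([r["plan"] for r in rows])

# Final numeric feature matrix: [normalized spend, is_basic, is_pro]
features = [[s] + p for s, p in zip(spend, plan_encoded)]
```

In a real project the scaling parameters (here `lo` and `hi`) should be computed on the training set only and reused for validation, test, and production data.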

Step 3: Select the Appropriate Model Type

Choose the model type based on the nature of your problem: classification, clustering, recommendation, or decision-making. Options include supervised, unsupervised, reinforcement, self-supervised, or transfer learning. Use federated learning for privacy-preserving applications. Matching model type to use case is key to effectiveness.

Step 4: Choose Tools, Frameworks, and Infrastructure

Select development frameworks like TensorFlow, PyTorch, or Scikit-learn based on your project scope. Depending on the sensitivity of the data, opt for cloud platforms (AWS SageMaker, Azure ML, Google Vertex AI) or on-premises solutions. Implement MLOps tools to automate version control, model retraining, and continuous integration/continuous deployment (CI/CD) pipelines.

Step 5: Train the Model

Split your dataset into training, validation, and test sets. Train the model using optimization algorithms like gradient descent while tuning hyperparameters for the best results. Apply cross-validation and regularization techniques to avoid overfitting. Utilize GPUs/TPUs for efficient computation in large-scale training.
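To show what gradient descent with regularization does under the hood, here is a minimal logistic regression trained in plain Python. It is a teaching sketch under simplifying assumptions (tiny made-up dataset, batch updates, hand-picked hyperparameters); frameworks such as TensorFlow, PyTorch, or Scikit-learn handle this far more efficiently.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, features):
    """Probability of the positive class for one example."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

def train_logistic_regression(X, y, learning_rate=0.1, l2=0.01, epochs=500):
    """Batch gradient descent on log loss with an L2 penalty on the weights."""
    n, n_features = len(X), len(X[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for features, label in zip(X, y):
            error = predict_proba(weights, bias, features) - label
            for j, x in enumerate(features):
                grad_w[j] += error * x
            grad_b += error
        # Step against the average gradient; the l2 term shrinks weights
        # toward zero, which is the regularization that limits overfitting.
        weights = [w - learning_rate * (g / n + l2 * w)
                   for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias

# Toy training data: one normalized feature, label 1 for high values.
X_train = [[0.1], [0.2], [0.3], [0.7], [0.8], [0.9]]
y_train = [0, 0, 0, 1, 1, 1]

weights, bias = train_logistic_regression(
    X_train, y_train, learning_rate=0.5, l2=0.001, epochs=2000
)
low_score = predict_proba(weights, bias, [0.1])
high_score = predict_proba(weights, bias, [0.9])
```

`learning_rate`, `l2`, and `epochs` are the hyperparameters here. In practice they are tuned against the validation set rather than chosen by hand.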

Step 6: Model Evaluation and Validation

Test your model on unseen data to measure accuracy, precision, recall, or mean squared error (MSE), depending on the problem type. Evaluate its generalizability and ensure it works across all target user groups. Identify any bias or performance gaps across demographics. A rigorous evaluation phase ensures real-world reliability.
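One simple way to check performance across user groups is to compute the same metric per group and compare. The sketch below does this for recall; the group labels and predictions are invented for illustration, and what counts as an acceptable gap is a judgment call for each project.

```python
from collections import defaultdict

def recall_by_group(y_true, y_pred, groups):
    """Recall (share of actual positives that were caught), computed per group."""
    tp, fn = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            if pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    return {g: tp[g] / (tp[g] + fn[g]) for g in sorted(set(tp) | set(fn))}

def max_recall_gap(recalls):
    """Largest difference in recall between any two groups."""
    return max(recalls.values()) - min(recalls.values())

# Hypothetical test-set results for two demographic groups, A and B.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 0]
groups = ["A"] * 5 + ["B"] * 5

recalls = recall_by_group(y_true, y_pred, groups)
gap = max_recall_gap(recalls)
```

Here the model catches 75% of positives in group A but only 50% in group B, a gap that would be invisible in a single overall score.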

Step 7: Deployment into Production

Depending on business needs, deploy the model for batch or real-time inference. REST APIs are commonly used for application integration, and models can be containerized with Docker and orchestrated with Kubernetes for scalability. Ensure robust logging, uptime monitoring, and fallback mechanisms. Deployment must align with your tech environment and user workflow.
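A real-time REST endpoint can be sketched with nothing but Python's standard library. Everything specific here is an assumption for illustration: the `/predict` path, the JSON shape, and the placeholder `score` function standing in for a trained model. A production service would more likely use a dedicated web framework and run inside a container.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

def score(features):
    """Placeholder model (hypothetical): replace with a call to the trained model."""
    return min(1.0, max(0.0, sum(features) / len(features)))

def handle_predict(body: bytes):
    """Validate a JSON request body and return (http_status, response_dict)."""
    try:
        payload = json.loads(body)
        features = [float(x) for x in payload["features"]]
        if not features:
            raise ValueError("empty feature list")
    except (ValueError, KeyError, TypeError) as exc:
        # Fallback path: reject bad input instead of crashing the service.
        return 400, {"error": f"invalid request: {exc}"}
    return 200, {"score": score(features)}

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, result = handle_predict(self.rfile.read(length))
        data = json.dumps(result).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

def serve(port=8000):
    """Start the server; call this from your own entry point."""
    HTTPServer(("0.0.0.0", port), PredictHandler).serve_forever()
```

Calling `serve()` starts the endpoint, after which a client can POST a body such as `{"features": [0.2, 0.4]}` to `/predict`. Keeping validation in `handle_predict`, separate from the HTTP plumbing, makes the logic easy to test without starting a server.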

Step 8: Monitor and Maintain the Model

Track performance KPIs, prediction quality, and runtime metrics like latency. Use alerts or dashboards to monitor for data and concept drift. Automate retraining pipelines to ensure the model adapts over time. Use tools like MLflow or Weights & Biases for ongoing experiment tracking and version control.
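Data drift can be monitored by comparing the distribution of a feature in production against the distribution seen at training time. The sketch below uses the Population Stability Index (PSI) as one possible drift score. The choice of PSI, the bin count, and the 0.2 alert threshold (a commonly cited rule of thumb) are assumptions for this example, not requirements.

```python
import math

def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1  # clamp out-of-range values
        # eps avoids log(0) when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))

DRIFT_ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb threshold

baseline = [i / 100 for i in range(100)]       # feature values at training time
recent = [0.5 + i / 200 for i in range(100)]   # production values, shifted upward

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, recent)
needs_retraining = psi_shifted > DRIFT_ALERT_THRESHOLD
```

A check like this can run on a schedule and feed an alert or trigger the retraining pipeline. Concept drift, where the relationship between inputs and outcomes changes, additionally requires tracking prediction quality against fresh labels.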

Step 9: Address Ethical, Legal, and Governance Considerations

Ensure transparency in decision-making by utilising explainability tools such as SHAP or LIME. Regularly test for and mitigate bias in predictions to ensure fairness and reliability. Encrypt sensitive data, enforce strict access controls, and document compliance with regulations and standards such as the CCPA, HIPAA, or SOC 2. Responsible AI practices build user trust and reduce risk.

Step 10: Continuously Improve and Scale

Use feedback loops to enhance performance based on real-world usage. Expand the model to support additional departments, geographies, or languages. To increase value, combine it with NLP, computer vision, or other AI capabilities. Treat AI as a living asset that evolves alongside your business.

Cost of Building Custom AI Models for Your Business

The cost of building a custom AI model varies significantly based on the complexity of the problem, the volume and quality of data, the technology stack, and the expertise of the development team. While some AI solutions can be developed with minimal investment using pre-trained models and open-source tools, enterprise-grade AI systems with advanced features and scalability requirements involve substantial investment.

Key Factors Affecting AI Model Development Cost

Project Scope and Complexity

The more complex the use case (e.g., real-time fraud detection vs. simple customer segmentation), the higher the costs. Custom logic, integration with existing systems, and real-time capabilities all contribute to increased development time and cost.

Data Collection and Processing

Acquiring, cleaning, labeling, and preparing data can account for 20–40% of the total project budget. The cost increases with the need for large, diverse, or domain-specific datasets, particularly in fields like healthcare or finance.

Technology and Infrastructure

Cloud-based AI development with services like AWS SageMaker, Google Cloud AI, or Azure ML often involves pay-as-you-go pricing. Alternatively, on-premise setups may require significant upfront hardware investment. GPU/TPU usage, storage, bandwidth, and API integrations also impact cost.

Development Team Composition

A comprehensive AI team typically includes:

- Data scientists and ML engineers

- Software developers

- Data engineers

- Domain experts

- Project managers

Hiring in-house talent or partnering with an experienced AI consulting company can impact both the cost and speed to market.

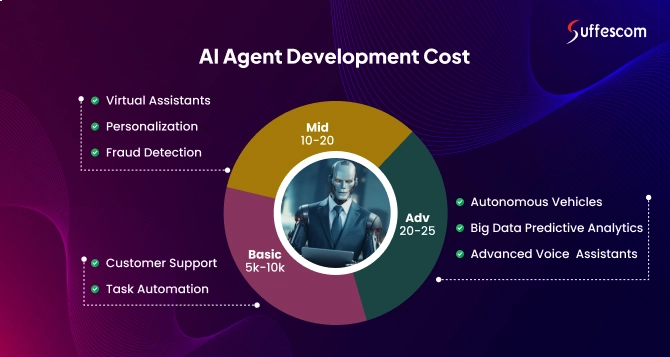

Timeframe and Maintenance

A basic AI MVP may take 4–6 weeks and cost between $10,000 and $30,000, whereas enterprise-level solutions with end-to-end MLOps pipelines and robust governance may incur higher costs. Post-deployment, businesses must also budget for model monitoring, periodic retraining, and technical support.

Estimated Cost Breakdown of Building Custom AI Models

| Component | Estimated Cost Range |
| --- | --- |
| Problem Scoping & Planning | $5,000 – $15,000 |
| Data Acquisition & Preparation | $10,000 – $20,000 |
| Model Design & Training | $5,000 – $10,000 |
| Testing & Validation | $5,000 – $15,000 |
| Deployment & Integration | $10,000 – $15,000 |
| Ongoing Maintenance (annual) | $5,000 – $10,000+ |
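Adding up the ranges in the table gives a rough overall budget envelope. The short calculation below is only arithmetic on the figures above. Treating the open-ended "$10,000+" maintenance figure as exactly $10,000 is an assumption, so the upper first-year total is a floor rather than a cap.

```python
# (low, high) estimates in USD, copied from the table above.
build_costs = {
    "Problem Scoping & Planning": (5_000, 15_000),
    "Data Acquisition & Preparation": (10_000, 20_000),
    "Model Design & Training": (5_000, 10_000),
    "Testing & Validation": (5_000, 15_000),
    "Deployment & Integration": (10_000, 15_000),
}
annual_maintenance = (5_000, 10_000)  # upper bound is "10,000+" in the table

build_low = sum(low for low, _ in build_costs.values())
build_high = sum(high for _, high in build_costs.values())

first_year_low = build_low + annual_maintenance[0]
first_year_high = build_high + annual_maintenance[1]

print(f"One-time build: ${build_low:,} to ${build_high:,}")
print(f"First year incl. maintenance: ${first_year_low:,} to ${first_year_high:,}+")
```

On these figures the one-time build comes to $35,000 to $75,000, and the first year including maintenance to $40,000 to $85,000 or more.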

Final Thoughts

Building an intelligent AI model is not just about algorithms—it's a strategic investment that combines data, technology, people, and processes to drive effective outcomes. Enterprises that align AI development with their core objectives, deploy robust architectures, and adopt an ethical approach to AI will gain a competitive advantage in the years to come.

By understanding the layers, model types, and development workflow, businesses can harness AI for not only automation but also innovation, resilience, and growth in a rapidly evolving digital economy.

Ready to build your AI model? Start by identifying one high-impact problem in your business and gathering relevant data.

FAQs

1. What types of business problems can AI models solve?

Custom AI models can be tailored to address a wide range of business problems, including customer churn prediction, fraud detection, supply chain optimization, demand forecasting, document automation, sentiment analysis, and personalized marketing. The key is identifying high-impact, data-driven use cases aligned with your business goals.

2. How much data do we need to train a reliable AI model?

The amount of data required depends on the complexity of the problem and the type of model used. Simple models may require thousands of records, while deep learning models can require millions. However, with techniques such as transfer learning and data augmentation, even small or medium-sized datasets can yield effective results.

3. How long does it take to build and deploy an AI model?

The development timeline can range from a few weeks to several months, depending on the project's scope, data readiness, and complexity of the AI solution. A basic model with available data might take 4–6 weeks, while enterprise-scale solutions with custom integrations may take 3–6 months or more.

4. How do you ensure our AI model stays accurate over time?

We implement continuous monitoring and model maintenance practices to detect data or concept drift. Periodic retraining on fresh data, performance audits, and feedback loops are established to ensure sustained accuracy and relevance over time.

5. What security and compliance measures do you follow?

We adhere to industry-standard practices, including data anonymization, encryption, and role-based access control. Our solutions are designed to comply with regulations such as GDPR, HIPAA, and CCPA, ensuring data privacy, model transparency, and ethical AI governance.
